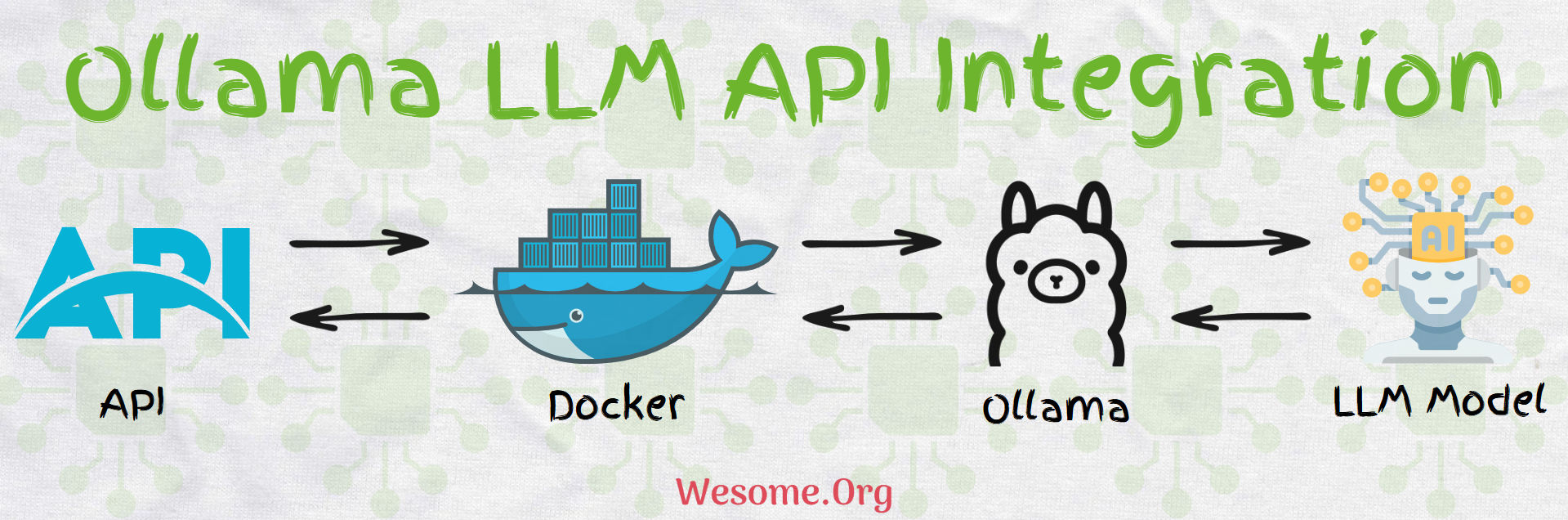

Ollama is a platform for running large language models (LLMs) locally. It exposes API endpoints for interacting with those models; responses are returned as JSON and can also be streamed. This article walks through Ollama LLM API integration.

The examples below use Mistral as the loaded model; substitute any model you prefer.

Here Ollama runs inside a Docker container, so the commands below, which report the available models and their current status, are all executed inside that container.

List all available Ollama models in the container

docker exec -it ollama_container ollama list

Show information about the Ollama mistral model

docker exec -it ollama_container ollama show mistral

Show which Ollama model is currently loaded

docker exec -it ollama_container ollama ps

Stop the Ollama model

docker exec -it ollama_container ollama stop mistral

Show the Ollama model's Modelfile

docker exec -it ollama_container ollama show --modelfile mistral

Ollama Model API integration

Ollama listens on port 11434. Because it runs inside a Docker container, publish port 11434 from the container to the host machine so that the API is reachable at http://localhost:11434. Ollama exposes a REST API for integration.
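For readers who prefer to call the API from code rather than curl, here is a minimal Python sketch using only the standard library. The helper names `build_request` and `call` are illustrative, not part of Ollama, and the base URL assumes port 11434 is published on the host.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: port published on the host


def build_request(path, payload=None):
    """Build a request for an Ollama endpoint: POST with a JSON body if a payload is given, else GET."""
    data = None if payload is None else json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL + path,
        data=data,
        headers={"Content-Type": "application/json"},
        method="GET" if payload is None else "POST",
    )


def call(path, payload=None):
    """Send the request and decode a single (non-streamed) JSON response."""
    with urllib.request.urlopen(build_request(path, payload)) as resp:
        return json.loads(resp.read())
```

With a running server, `call("/api/tags")` would return the decoded model list; streamed responses need line-by-line handling instead.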

Get the list of models

Ollama can hold several models locally; some may be loaded in memory and others not. This endpoint lists every model that is available locally.
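Once the /api/tags response has been decoded, the model names can be picked out along these lines. This is a small Python sketch; `model_names` is a hypothetical helper and the sample data is hand-written in the shape the endpoint returns, not captured output.

```python
def model_names(tags_response):
    """Return the model names from a decoded /api/tags response."""
    return [m["name"] for m in tags_response.get("models", [])]


# Hand-written sample; real responses carry more fields per model.
sample = {
    "models": [
        {"name": "mistral:latest", "size": 4109865159},
        {"name": "mario:latest", "size": 4109865200},
    ]
}
print(model_names(sample))
```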

curl --location 'http://localhost:11434/api/tags'

Show model info

Ollama provides in-depth details for each model, such as its Modelfile, template, parameters, license, and system prompt.

curl --location 'http://localhost:11434/api/show' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral"
}'

Show Model Info in Verbose Mode

Ollama accepts an optional verbose parameter in the request; if it is set to true, the response includes the full, detailed information about the model.

curl --location 'http://localhost:11434/api/show' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral",
  "verbose": true
}'

Pull a model

Ollama lets you pull as many models as you need, provided sufficient disk space and memory are available. If a previous pull was interrupted, the new pull resumes from where it left off. Pulls can be streamed or non-streamed, controlled by the stream parameter. According to the Ollama API documentation, the response is streamed as a sequence of JSON status objects when stream is omitted or true; pass "stream": false to receive a single JSON object instead.

curl --location 'http://localhost:11434/api/pull' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral"
}'

Pull Model in Streaming Mode

In streaming mode, the pull returns a JSON status object for every step.
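As a rough sketch of how a client might consume these status objects, the Python below reads one JSON object per line and derives a percentage when the object carries `total` and `completed` byte counts. The helper name `pull_progress` is illustrative, and the sample lines are hand-written, not captured from a real pull.

```python
import json


def pull_progress(lines):
    """Yield (status, percent) per streamed line; percent is None when byte counts are absent."""
    for line in lines:
        if not line.strip():
            continue  # skip blank keep-alive lines
        event = json.loads(line)
        total, done = event.get("total"), event.get("completed")
        percent = round(100 * done / total, 1) if total and done is not None else None
        yield event.get("status"), percent


# Hand-written sample stream (illustrative values).
sample_lines = [
    '{"status": "pulling manifest"}',
    '{"status": "pulling abc123", "total": 100, "completed": 50}',
    '{"status": "success"}',
]
for status, percent in pull_progress(sample_lines):
    print(status, percent)
```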

curl --location 'http://localhost:11434/api/pull' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral",
  "stream": true
}'

Create Ollama Model

Ollama can create a new model from a Modelfile that builds on a model which already exists locally.

curl --location 'http://localhost:11434/api/create' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mario",
  "modelfile": "FROM mistral\nSYSTEM You are Mario from Super Mario Bros."
}'

Copy a Model

Ollama can copy an existing model under a new name.

curl --location 'http://localhost:11434/api/copy' \
--header 'Content-Type: application/json' \
--data '{
  "source": "mistral",
  "destination": "mistral-backup"
}'

Delete a Model

Ollama models can be deleted, but be careful: a deletion cannot be undone.

curl -L -X DELETE 'http://localhost:11434/api/delete' \
-H 'Content-Type: application/json' \
-d '{
  "model": "mistral-backup:latest"
}'

List of running models

Ollama can run and manage multiple models simultaneously. The API below returns the list of models currently loaded in memory; it is a plain GET request and needs no request body.

curl --location 'http://localhost:11434/api/ps'

Load the model in memory

To save memory, Ollama by default keeps a model loaded for only 5 minutes after its last use and then unloads it. To load a model into memory ahead of time, send a request that contains only the model name and no prompt.

curl --location 'http://localhost:11434/api/generate' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral"
}'

This can be confirmed by running the following command:

docker exec -it ollama_container ollama ps

Load the model in memory forever

Ollama can keep a model in memory indefinitely: set keep_alive to -1.

curl --location 'http://localhost:11434/api/generate' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral",
  "keep_alive": -1
}'

This can be confirmed by running the following command:

docker exec -it ollama_container ollama ps

Unload the model

By default, Ollama unloads a model that is not in use to save memory. If a model has not been unloaded automatically, you can unload it manually by passing keep_alive as 0.

curl --location 'http://localhost:11434/api/generate' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral",
  "keep_alive": 0
}'

This can be confirmed by running the following command:

docker exec -it ollama_container ollama ps
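The three requests above (load, keep loaded, unload) differ only in the keep_alive field, which a client could capture in one helper. This is a Python sketch; the function name and the action labels "load", "pin", and "unload" are made up for illustration and are not Ollama terms.

```python
def keep_alive_payload(model, action):
    """Build the /api/generate body that loads, pins, or unloads a model."""
    payload = {"model": model}  # no prompt: the request only manages memory
    if action == "pin":
        payload["keep_alive"] = -1  # keep loaded indefinitely
    elif action == "unload":
        payload["keep_alive"] = 0  # unload immediately
    elif action != "load":  # "load" relies on the default 5-minute window
        raise ValueError(f"unknown action: {action}")
    return payload


print(keep_alive_payload("mistral", "unload"))
```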

Generate a response with an Ollama model

Ollama provides a generate API: the request carries a prompt, and the model returns its response in streaming or non-streaming mode, as requested.

Generate Ollama Model response in streaming mode

In streaming mode the response arrives as a sequence of JSON objects, each carrying the next fragment of the generated text.
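A client has to stitch the streamed fragments back together. The Python sketch below assumes each streamed line is a JSON object with a `response` text fragment and a `done` flag; `join_stream` is a hypothetical helper and the sample lines are hand-written.

```python
import json


def join_stream(lines):
    """Concatenate the "response" fragments until an object reports done."""
    parts = []
    for line in lines:
        if not line.strip():
            continue
        chunk = json.loads(line)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the final object closes the stream
    return "".join(parts)


# Hand-written sample stream (illustrative text).
sample_stream = [
    '{"response": "The sky", "done": false}',
    '{"response": " is blue.", "done": false}',
    '{"response": "", "done": true}',
]
print(join_stream(sample_stream))
```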

curl --location 'http://localhost:11434/api/generate' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral",
  "prompt": "Why is the sky blue?"
}'

Generate Ollama Model response in non-streaming mode

Ollama also supports non-streaming mode, in which the whole answer comes back in a single JSON response.

curl --location 'http://localhost:11434/api/generate' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral",
  "prompt": "Why is the sky blue?",
  "stream": false
}'

Send Image

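Ollama also accepts base64-encoded images in the images field, provided the underlying model supports image input. The standard mistral model is text-only, so substitute a multimodal model such as llava when running this request.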
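Producing the base64 string is the only extra step compared with a text request. Here is a minimal Python sketch; `image_payload` is an illustrative helper, and the model name "llava" and the file name in the usage note are assumptions.

```python
import base64


def image_payload(model, prompt, image_bytes):
    """Build a non-streaming /api/generate body with one base64-encoded image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {"model": model, "prompt": prompt, "images": [encoded], "stream": False}


# Tiny stand-in bytes; a real call would read an image file instead.
payload = image_payload("llava", "What is in this picture?", b"abc")
print(payload["images"])
```

In practice the bytes would come from something like `open("photo.png", "rb").read()`.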

curl --location 'http://localhost:11434/api/generate' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral",
  "prompt": "What is in this picture?",
  "images": [
"iVBORw0KGgoAAAANSUhEUgAAAG0AAABmCAYAAADBPx+VAAAACXBIWXMAAAsTAAALEwEAmpwYAAAAAXNSR0IArs4c6QAAAARnQU1BAACxjwv8YQUAAA3VSURBVHgB7Z27r0zdG8fX743i1bi1ikMoFMQloXRpKFFIqI7LH4BEQ+NWIkjQuSWCRIEoULk0gsK1kCBI0IhrQVT7tz/7zZo888yz1r7MnDl7z5xvsjkzs2fP3uu71nNfa7lkAsm7d++Sffv2JbNmzUqcc8m0adOSzZs3Z+/XES4ZckAWJEGWPiCxjsQNLWmQsWjRIpMseaxcuTKpG/7HP27I8P79e7dq1ars/yL4/v27S0ejqwv+cUOGEGGpKHR37tzJCEpHV9tnT58+dXXCJDdECBE2Ojrqjh071hpNECjx4cMHVycM1Uhbv359B2F79+51586daxN/+pyRkRFXKyRDAqxEp4yMlDDzXG1NPnnyJKkThoK0VFd1ELZu3TrzXKxKfW7dMBQ6bcuWLW2v0VlHjx41z717927ba22U9APcw7Nnz1oGEPeL3m3p2mTAYYnFmMOMXybPPXv2bNIPpFZr1NHn4HMw0KRBjg9NuRw95s8PEcz/6DZELQd/09C9QGq5RsmSRybqkwHGjh07OsJSsYYm3ijPpyHzoiacg35MLdDSIS/O1yM778jOTwYUkKNHWUzUWaOsylE00MyI0fcnOwIdjvtNdW/HZwNLGg+sR1kMepSNJXmIwxBZiG8tDTpEZzKg0GItNsosY8USkxDhD0Rinuiko2gfL/RbiD2LZAjU9zKQJj8RDR0vJBR1/Phx9+PHj9Z7REF4nTZkxzX4LCXHrV271qXkBAPGfP/atWvu/PnzHe4C97F48eIsRLZ9+3a3f/9+87dwP1JxaF7/3r17ba+5l4EcaVo0lj3SBq5kGTJSQmLWMjgYNei2GPT1MuMqGTDEFHzeQSP2wi/jGnkmPJ/nhccs44jvDAxpVcxnq0F6eT8h4ni/iIWpR5lPyA6ETkNXoSukvpJAD3AsXLiwpZs49+fPn5ke4j10TqYvegSfn0OnafC+Tv9ooA/JPkgQysqQNBzagXY55nO/oa1F7qvIPWkRL12WRpMWUvpVDYmxAPehxWSe8ZEXL20sadYIozfmNch4QJPAfeJgW3rNsnzphBKNJM2KKODo1rVOMRYik5ETy3ix4qWNI81qAAirizgMIc+yhTytx0JWZuNI03qsrgWlGtwjoS9XwgUhWGyhUaRZZQNNIEwCiXD16tXcAHUs79co0vSD8rrJCIW98pzvxpAWyyo3HYwqS0+H0BjStClcZJT5coMm6D2LOF8TolGJtK9fvyZpyiC5ePFi9nc/oJU4eiEP0jVoAnHa9wyJycITMP78+eMeP37sXrx44d6+fdt6f82aNdkx1pg9e3Zb5W+RSRE+n+VjksQWifvVaTKFhn5O8my63K8Qabdv33b379/PiAP//vuvW7BggZszZ072/+TJk91YgkafPn166zXB1rQHFvouAWHq9z3SEevSUerqCn2/dDCeta2jxYbr69evk4MHDyY7d+7MjhMnTiTPnz9Pfv/+nfQT2ggpO2dMF8cghuoM7Ygj5iWCqRlGFml0QC/ftGmTmzt3rmsaKDsgBSPh0/8yPeLLBihLkOKJc0jp8H8vUzcxIA1k6QJ/c78tWEyj5P3o4u9+jywNPdJi5rAH9x0KHcl4Hg570eQp3+vHXGyrmEeigzQsQsjavXt38ujRo44LQuDDhw+TW7duRS1HGgMxhNXHgflaNTOsHyKvHK5Ijo2jbFjJBQK9YwFd6RVMzfgRBmEfP37suBBm/p49e1qjEP2mwTViNRo0VJWH1deMXcNK08uUjVUu7s/zRaL+oLNxz1bpANco4npUgX4G2eFbpDFyQoQxojBCpEGSytmOH8qrH5Q9vuzD6ofQylkCUmh8DBAr+q8JCyVNtWQIidKQE9wNtLSQnS4jDSsxNHogzFuQBw4
cyM61UKVsjfr3ooBkPSqqQHesUPWVtzi9/vQi1T+rJj7WiTz4Pt/l3LxUkr5P2VYZaZ4URpsE+st/dujQoaBBYokbrz/8TJNQYLSonrPS9kUaSkPeZyj1AWSj+d+VBoy1pIWVNed8P0Ll/ee5HdGRhrHhR5GGN0r4LGZBaj8oFDJitBTJzIZgFcmU0Y8ytWMZMzJOaXUSrUs5RxKnrxmbb5YXO9VGUhtpXldhEUogFr3IzIsvlpmdosVcGVGXFWp2oU9kLFL3dEkSz6NHEY1sjSRdIuDFWEhd8KxFqsRi1uM/nz9/zpxnwlESONdg6dKlbsaMGS4EHFHtjFIDHwKOo46l4TxSuxgDzi+rE2jg+BaFruOX4HXa0Nnf1lwAPufZeF8/r6zD97WK2qFnGjBxTw5qNGPxT+5T/r7/7RawFC3j4vTp09koCxkeHjqbHJqArmH5UrFKKksnxrK7FuRIs8STfBZv+luugXZ2pR/pP9Ois4z+TiMzUUkUjD0iEi1fzX8GmXyuxUBRcaUfykV0YZnlJGKQpOiGB76x5GeWkWWJc3mOrK6S7xdND+W5N6XyaRgtWJFe13GkaZnKOsYqGdOVVVbGupsyA/l7emTLHi7vwTdirNEt0qxnzAvBFcnQF16xh/TMpUuXHDowhlA9vQVraQhkudRdzOnK+04ZSP3DUhVSP61YsaLtd/ks7ZgtPcXqPqEafHkdqa84X6aCeL7YWlv6edGFHb+ZFICPlljHhg0bKuk0CSvVznWsotRu433alNdFrqG45ejoaPCaUkWERpLXjzFL2Rpllp7PJU2a/v7Ab8N05/9t27Z16KUqoFGsxnI9EosS2niSYg9SpU6B4JgTrvVW1flt1sT+0ADIJU2maXzcUTraGCRaL1Wp9rUMk16PMom8QhruxzvZIegJjFU7LLCePfS8uaQdPny4jTTL0dbee5mYokQsXTIWNY46kuMbnt8Kmec+LGWtOVIl9cT1rCB0V8WqkjAsRwta93TbwNYoGKsUSChN44lgBNCoHLHzquYKrU6qZ8lolCIN0Rh6cP0Q3U6I6IXILYOQI513hJaSKAorFpuHXJNfVlpRtmYBk1Su1obZr5dnKAO+L10Hrj3WZW+E3qh6IszE37F6EB+68mGpvKm4eb9bFrlzrok7fvr0Kfv727dvWRmdVTJHw0qiiCUSZ6wCK+7XL/AcsgNyL74DQQ730sv78Su7+t/A36MdY0sW5o40ahslXr58aZ5HtZB8GH64m9EmMZ7FpYw4T6QnrZfgenrhFxaSiSGXtPnz57e9TkNZLvTjeqhr734CNtrK41L40sUQckmj1lGKQ0rC37x544r8eNXRpnVE3ZZY7zXo8NomiO0ZUCj2uHz58rbXoZ6gc0uA+F6ZeKS/jhRDUq8MKrTho9fEkihMmhxtBI1DxKFY9XLpVcSkfoi8JGnToZO5sU5aiDQIW716ddt7ZLYtMQlhECdBGXZZMWldY5BHm5xgAroWj4C0hbYkSc/jBmggIrXJWlZM6pSETsEPGqZOndr2uuuR5rF169a2HoHPdurUKZM4CO1WTPqaDaAd+GFGKdIQkxAn9RuEWcTRyN2KSUgiSgF5aWzPTeA/lN5rZubMmR2bE4SIC4nJoltgAV/dVefZm72AtctUCJU2CMJ327hxY9t7EHbkyJFseq+EJSY16RPo3Dkq1kkr7+q0bNmyDuLQcZBEPYmHVdOBiJyIlrRDq41YPWfXOxUysi5fvtyaj+2BpcnsUV/oSoEMOk2CQGlr4ckhBwaetBhjCwH0ZHtJROPJkyc7UjcYLDjmrH7ADTEBXFfOYmB0k9oYBOjJ8b4aOYSe7QkKcYhFlq3QYLQhSidNmtS2RATwy8YOM3EQJsUjKiaWZ+vZToUQgzhkHXudb/PW5YMHD9yZM2faPsMwoc7RciYJXbGuBqJ1UIGKKLv915jsvgtJxCZDubdXr165mzdvtr1Hz5LONA8jrUwKPqsmVesKa49S3Q4
WxmRPUEYdTjgiUcfUwLx589ySJUva3oMkP6IYddq6HMS4o55xBJBUeRjzfa4Zdeg56QZ43LhxoyPo7Lf1kNt7oO8wWAbNwaYjIv5lhyS7kRf96dvm5Jah8vfvX3flyhX35cuX6HfzFHOToS1H4BenCaHvO8pr8iDuwoUL7tevX+b5ZdbBair0xkFIlFDlW4ZknEClsp/TzXyAKVOmmHWFVSbDNw1l1+4f90U6IY/q4V27dpnE9bJ+v87QEydjqx/UamVVPRG+mwkNTYN+9tjkwzEx+atCm/X9WvWtDtAb68Wy9LXa1UmvCDDIpPkyOQ5ZwSzJ4jMrvFcr0rSjOUh+GcT4LSg5ugkW1Io0/SCDQBojh0hPlaJdah+tkVYrnTZowP8iq1F1TgMBBauufyB33x1v+NWFYmT5KmppgHC+NkAgbmRkpD3yn9QIseXymoTQFGQmIOKTxiZIWpvAatenVqRVXf2nTrAWMsPnKrMZHz6bJq5jvce6QK8J1cQNgKxlJapMPdZSR64/UivS9NztpkVEdKcrs5alhhWP9NeqlfWopzhZScI6QxseegZRGeg5a8C3Re1Mfl1ScP36ddcUaMuv24iOJtz7sbUjTS4qBvKmstYJoUauiuD3k5qhyr7QdUHMeCgLa1Ear9NquemdXgmum4fvJ6w1lqsuDhNrg1qSpleJK7K3TF0Q2jSd94uSZ60kK1e3qyVpQK6PVWXp2/FC3mp6jBhKKOiY2h3gtUV64TWM6wDETRPLDfSakXmH3w8g9Jlug8ZtTt4kVF0kLUYYmCCtD/DrQ5YhMGbA9L3ucdjh0y8kOHW5gU/VEEmJTcL4Pz/f7mgoAbYkAAAAAElFTkSuQmCC"

  ],
  "stream": false
}'

Request in Raw Mode

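Ollama also supports raw mode: with raw set to true, no prompt template is applied and the prompt is passed to the model exactly as given, so the request must carry any model-specific formatting itself (here, Mistral's [INST] ... [/INST] markers).

curl --location 'http://localhost:11434/api/generate' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral",
  "prompt": "[INST] why is the sky blue? [/INST]",
  "raw": true,
  "stream": false
}'

Seed

Setting a fixed seed in options makes the output reproducible for the same prompt and parameters.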

curl --location 'http://localhost:11434/api/generate' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral",
  "prompt": "Why is the sky blue?",
  "options": {
    "seed": 123
  }
}'

Temperature

The temperature option controls how random the model's output is: the higher the temperature, the more random the result. A temperature of 0, as in the request below, makes the output as deterministic as possible.

curl --location 'http://localhost:11434/api/generate' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral",
  "prompt": "Why is the sky blue?",
  "stream": false,
  "options": {
    "temperature": 0
  }
}'

Response in JSON

Ollama lets the request specify the response format. In the request below, format is set to json, so the model responds with a JSON object. It also helps to ask for JSON in the prompt itself, as this example does.
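Note that the model's JSON arrives as a string inside the `response` field of the API's own JSON envelope, so it has to be decoded a second time. Here is a small Python sketch of that step; `parse_json_answer` is a hypothetical helper and the sample envelope is hand-written.

```python
import json


def parse_json_answer(api_response):
    """Decode the JSON document the model wrote into the "response" string."""
    try:
        return json.loads(api_response["response"])
    except (KeyError, json.JSONDecodeError):
        return None  # no text, or text that is not valid JSON


# Hand-written sample envelope (illustrative content).
sample_envelope = {"model": "mistral", "response": '{"morning": "blue"}', "done": True}
print(parse_json_answer(sample_envelope))
```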

curl --location 'http://localhost:11434/api/generate' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral",
  "prompt": "What color is the sky at different times of the day? Respond using JSON",
  "format": "json",
  "stream": false
}'

Add all the parameters

Ollama lets you set model parameters in the Modelfile, but they can also be passed dynamically in the options field of an API request. Several parameters can be combined as needed; the request below lists some of them. None of them is mandatory.

curl --location 'http://localhost:11434/api/generate' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral",
  "prompt": "Why is the sky blue?",
  "stream": false,
  "options": {
    "num_keep": 5,
    "seed": 42,
    "num_predict": 100,
    "top_k": 20,
    "top_p": 0.9,
    "min_p": 0.0,
    "tfs_z": 0.5,
    "typical_p": 0.7,
    "repeat_last_n": 33,
    "temperature": 0.8,
    "repeat_penalty": 1.2,
    "presence_penalty": 1.5,
    "frequency_penalty": 1.0,
    "mirostat": 1,
    "mirostat_tau": 0.8,
    "mirostat_eta": 0.6,
    "penalize_newline": true,
    "stop": [
      "\n",
      "user:"
    ],
    "numa": false,
    "num_ctx": 1024,
    "num_batch": 2,
    "num_gpu": 1,
    "main_gpu": 0,
    "low_vram": false,
    "f16_kv": true,
    "vocab_only": false,
    "use_mmap": true,
    "use_mlock": false,
    "num_thread": 8
  }
}'

Chat with Ollama Model

Ollama models support back-and-forth, chat-like conversations. The /api/generate endpoint handles single request/response exchanges; when you need to maintain a conversation, use the /api/chat endpoint instead.

curl --location 'http://localhost:11434/api/chat' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral",
  "messages": [
    {
      "role": "user",
      "content": "why is the sky blue?"
    }
  ]
}'

Chat with previous messages

To continue a conversation, pass the previous messages in the request; the model then answers in the context of that history.
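Because the history travels with every request, the client is the one that keeps it. Here is a minimal Python sketch of that bookkeeping, assuming the non-streaming chat response carries the reply under a `message` key; the class `ChatSession` and its method names are invented for illustration.

```python
class ChatSession:
    """Accumulates the message history to send with each /api/chat request."""

    def __init__(self, model):
        self.model = model
        self.messages = []

    def next_payload(self, user_text):
        """Append the user's turn and return the request body with the full history."""
        self.messages.append({"role": "user", "content": user_text})
        return {"model": self.model, "messages": list(self.messages), "stream": False}

    def record_reply(self, api_response):
        """Store the assistant's turn from a decoded response and return its text."""
        reply = api_response["message"]
        self.messages.append({"role": reply["role"], "content": reply["content"]})
        return reply["content"]
```

Each call to `next_payload` yields a body like the curl request that follows, growing by one user turn and one assistant turn per exchange.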

curl --location 'http://localhost:11434/api/chat' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral",
  "messages": [
    {
      "role": "user",
      "content": "why is the sky blue?"
    },
    {
      "role": "assistant",
      "content": "due to Rayleigh scattering."
    },
    {
      "role": "user",
      "content": "how is that different than mie scattering?"
    }
  ]
}'

Chat about an Image

Ollama's chat API also supports images, so a model that accepts image input can hold a conversation about them. This requires a multimodal model (for example llava) in place of the text-only mistral.

curl --location 'http://localhost:11434/api/chat' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral",
  "messages": [
    {
      "role": "user",
      "content": "what is in this image?",
      "images": [
"iVBORw0KGgoAAAANSUhEUgAAAG0AAABmCAYAAADBPx+VAAAACXBIWXMAAAsTAAALEwEAmpwYAAAAAXNSR0IArs4c6QAAAARnQU1BAACxjwv8YQUAAA3VSURBVHgB7Z27r0zdG8fX743i1bi1ikMoFMQloXRpKFFIqI7LH4BEQ+NWIkjQuSWCRIEoULk0gsK1kCBI0IhrQVT7tz/7zZo888yz1r7MnDl7z5xvsjkzs2fP3uu71nNfa7lkAsm7d++Sffv2JbNmzUqcc8m0adOSzZs3Z+/XES4ZckAWJEGWPiCxjsQNLWmQsWjRIpMseaxcuTKpG/7HP27I8P79e7dq1ars/yL4/v27S0ejqwv+cUOGEGGpKHR37tzJCEpHV9tnT58+dXXCJDdECBE2Ojrqjh071hpNECjx4cMHVycM1Uhbv359B2F79+51586daxN/+pyRkRFXKyRDAqxEp4yMlDDzXG1NPnnyJKkThoK0VFd1ELZu3TrzXKxKfW7dMBQ6bcuWLW2v0VlHjx41z717927ba22U9APcw7Nnz1oGEPeL3m3p2mTAYYnFmMOMXybPPXv2bNIPpFZr1NHn4HMw0KRBjg9NuRw95s8PEcz/6DZELQd/09C9QGq5RsmSRybqkwHGjh07OsJSsYYm3ijPpyHzoiacg35MLdDSIS/O1yM778jOTwYUkKNHWUzUWaOsylE00MyI0fcnOwIdjvtNdW/HZwNLGg+sR1kMepSNJXmIwxBZiG8tDTpEZzKg0GItNsosY8USkxDhD0Rinuiko2gfL/RbiD2LZAjU9zKQJj8RDR0vJBR1/Phx9+PHj9Z7REF4nTZkxzX4LCXHrV271qXkBAPGfP/atWvu/PnzHe4C97F48eIsRLZ9+3a3f/9+87dwP1JxaF7/3r17ba+5l4EcaVo0lj3SBq5kGTJSQmLWMjgYNei2GPT1MuMqGTDEFHzeQSP2wi/jGnkmPJ/nhccs44jvDAxpVcxnq0F6eT8h4ni/iIWpR5lPyA6ETkNXoSukvpJAD3AsXLiwpZs49+fPn5ke4j10TqYvegSfn0OnafC+Tv9ooA/JPkgQysqQNBzagXY55nO/oa1F7qvIPWkRL12WRpMWUvpVDYmxAPehxWSe8ZEXL20sadYIozfmNch4QJPAfeJgW3rNsnzphBKNJM2KKODo1rVOMRYik5ETy3ix4qWNI81qAAirizgMIc+yhTytx0JWZuNI03qsrgWlGtwjoS9XwgUhWGyhUaRZZQNNIEwCiXD16tXcAHUs79co0vSD8rrJCIW98pzvxpAWyyo3HYwqS0+H0BjStClcZJT5coMm6D2LOF8TolGJtK9fvyZpyiC5ePFi9nc/oJU4eiEP0jVoAnHa9wyJycITMP78+eMeP37sXrx44d6+fdt6f82aNdkx1pg9e3Zb5W+RSRE+n+VjksQWifvVaTKFhn5O8my63K8Qabdv33b379/PiAP//vuvW7BggZszZ072/+TJk91YgkafPn166zXB1rQHFvouAWHq9z3SEevSUerqCn2/dDCeta2jxYbr69evk4MHDyY7d+7MjhMnTiTPnz9Pfv/+nfQT2ggpO2dMF8cghuoM7Ygj5iWCqRlGFml0QC/ftGmTmzt3rmsaKDsgBSPh0/8yPeLLBihLkOKJc0jp8H8vUzcxIA1k6QJ/c78tWEyj5P3o4u9+jywNPdJi5rAH9x0KHcl4Hg570eQp3+vHXGyrmEeigzQsQsjavXt38ujRo44LQuDDhw+TW7duRS1HGgMxhNXHgflaNTOsHyKvHK5Ijo2jbFjJBQK9YwFd6RVMzfgRBmEfP37suBBm/p49e1qjEP2mwTViNRo0VJWH1deMXcNK08uUjVUu7s/zRaL+oLNxz1bpANco4npUgX4G2eFbpDFyQoQxojBCpEGSytmOH8qrH5Q9vuzD6ofQylkCUmh8DBAr+q8JCyVNtWQIidKQE9wNtLSQnS4jDSsxNHogzFuQBw4
cyM61UKVsjfr3ooBkPSqqQHesUPWVtzi9/vQi1T+rJj7WiTz4Pt/l3LxUkr5P2VYZaZ4URpsE+st/dujQoaBBYokbrz/8TJNQYLSonrPS9kUaSkPeZyj1AWSj+d+VBoy1pIWVNed8P0Ll/ee5HdGRhrHhR5GGN0r4LGZBaj8oFDJitBTJzIZgFcmU0Y8ytWMZMzJOaXUSrUs5RxKnrxmbb5YXO9VGUhtpXldhEUogFr3IzIsvlpmdosVcGVGXFWp2oU9kLFL3dEkSz6NHEY1sjSRdIuDFWEhd8KxFqsRi1uM/nz9/zpxnwlESONdg6dKlbsaMGS4EHFHtjFIDHwKOo46l4TxSuxgDzi+rE2jg+BaFruOX4HXa0Nnf1lwAPufZeF8/r6zD97WK2qFnGjBxTw5qNGPxT+5T/r7/7RawFC3j4vTp09koCxkeHjqbHJqArmH5UrFKKksnxrK7FuRIs8STfBZv+luugXZ2pR/pP9Ois4z+TiMzUUkUjD0iEi1fzX8GmXyuxUBRcaUfykV0YZnlJGKQpOiGB76x5GeWkWWJc3mOrK6S7xdND+W5N6XyaRgtWJFe13GkaZnKOsYqGdOVVVbGupsyA/l7emTLHi7vwTdirNEt0qxnzAvBFcnQF16xh/TMpUuXHDowhlA9vQVraQhkudRdzOnK+04ZSP3DUhVSP61YsaLtd/ks7ZgtPcXqPqEafHkdqa84X6aCeL7YWlv6edGFHb+ZFICPlljHhg0bKuk0CSvVznWsotRu433alNdFrqG45ejoaPCaUkWERpLXjzFL2Rpllp7PJU2a/v7Ab8N05/9t27Z16KUqoFGsxnI9EosS2niSYg9SpU6B4JgTrvVW1flt1sT+0ADIJU2maXzcUTraGCRaL1Wp9rUMk16PMom8QhruxzvZIegJjFU7LLCePfS8uaQdPny4jTTL0dbee5mYokQsXTIWNY46kuMbnt8Kmec+LGWtOVIl9cT1rCB0V8WqkjAsRwta93TbwNYoGKsUSChN44lgBNCoHLHzquYKrU6qZ8lolCIN0Rh6cP0Q3U6I6IXILYOQI513hJaSKAorFpuHXJNfVlpRtmYBk1Su1obZr5dnKAO+L10Hrj3WZW+E3qh6IszE37F6EB+68mGpvKm4eb9bFrlzrok7fvr0Kfv727dvWRmdVTJHw0qiiCUSZ6wCK+7XL/AcsgNyL74DQQ730sv78Su7+t/A36MdY0sW5o40ahslXr58aZ5HtZB8GH64m9EmMZ7FpYw4T6QnrZfgenrhFxaSiSGXtPnz57e9TkNZLvTjeqhr734CNtrK41L40sUQckmj1lGKQ0rC37x544r8eNXRpnVE3ZZY7zXo8NomiO0ZUCj2uHz58rbXoZ6gc0uA+F6ZeKS/jhRDUq8MKrTho9fEkihMmhxtBI1DxKFY9XLpVcSkfoi8JGnToZO5sU5aiDQIW716ddt7ZLYtMQlhECdBGXZZMWldY5BHm5xgAroWj4C0hbYkSc/jBmggIrXJWlZM6pSETsEPGqZOndr2uuuR5rF169a2HoHPdurUKZM4CO1WTPqaDaAd+GFGKdIQkxAn9RuEWcTRyN2KSUgiSgF5aWzPTeA/lN5rZubMmR2bE4SIC4nJoltgAV/dVefZm72AtctUCJU2CMJ327hxY9t7EHbkyJFseq+EJSY16RPo3Dkq1kkr7+q0bNmyDuLQcZBEPYmHVdOBiJyIlrRDq41YPWfXOxUysi5fvtyaj+2BpcnsUV/oSoEMOk2CQGlr4ckhBwaetBhjCwH0ZHtJROPJkyc7UjcYLDjmrH7ADTEBXFfOYmB0k9oYBOjJ8b4aOYSe7QkKcYhFlq3QYLQhSidNmtS2RATwy8YOM3EQJsUjKiaWZ+vZToUQgzhkHXudb/PW5YMHD9yZM2faPsMwoc7RciYJXbGuBqJ1UIGKKLv915jsvgtJxCZDubdXr165mzdvtr1Hz5LONA8jrUwKPqsmVesKa49S3Q4
WxmRPUEYdTjgiUcfUwLx589ySJUva3oMkP6IYddq6HMS4o55xBJBUeRjzfa4Zdeg56QZ43LhxoyPo7Lf1kNt7oO8wWAbNwaYjIv5lhyS7kRf96dvm5Jah8vfvX3flyhX35cuX6HfzFHOToS1H4BenCaHvO8pr8iDuwoUL7tevX+b5ZdbBair0xkFIlFDlW4ZknEClsp/TzXyAKVOmmHWFVSbDNw1l1+4f90U6IY/q4V27dpnE9bJ+v87QEydjqx/UamVVPRG+mwkNTYN+9tjkwzEx+atCm/X9WvWtDtAb68Wy9LXa1UmvCDDIpPkyOQ5ZwSzJ4jMrvFcr0rSjOUh+GcT4LSg5ugkW1Io0/SCDQBojh0hPlaJdah+tkVYrnTZowP8iq1F1TgMBBauufyB33x1v+NWFYmT5KmppgHC+NkAgbmRkpD3yn9QIseXymoTQFGQmIOKTxiZIWpvAatenVqRVXf2nTrAWMsPnKrMZHz6bJq5jvce6QK8J1cQNgKxlJapMPdZSR64/UivS9NztpkVEdKcrs5alhhWP9NeqlfWopzhZScI6QxseegZRGeg5a8C3Re1Mfl1ScP36ddcUaMuv24iOJtz7sbUjTS4qBvKmstYJoUauiuD3k5qhyr7QdUHMeCgLa1Ear9NquemdXgmum4fvJ6w1lqsuDhNrg1qSpleJK7K3TF0Q2jSd94uSZ60kK1e3qyVpQK6PVWXp2/FC3mp6jBhKKOiY2h3gtUV64TWM6wDETRPLDfSakXmH3w8g9Jlug8ZtTt4kVF0kLUYYmCCtD/DrQ5YhMGbA9L3ucdjh0y8kOHW5gU/VEEmJTcL4Pz/f7mgoAbYkAAAAAElFTkSuQmCC"

      ]
    }
  ]
}'

Chat request with Reproducible outputs

curl --location 'http://localhost:11434/api/chat' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral",
  "messages": [
    {
      "role": "user",
      "content": "Hello!"
    }
  ],
  "options": {
    "seed": 101,
    "temperature": 0
  }
}'

Chat with Tools

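The chat API supports tool calling with models that have been trained for it. The request describes the available tools (functions) with a JSON schema. The model does not run anything itself: it may answer with a tool_calls entry naming the function and arguments it wants, and the calling application is expected to execute that function.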

curl --location 'http://localhost:11434/api/chat' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral",
  "messages": [
    {
      "role": "user",
      "content": "What is the weather today in Paris?"
    }
  ],
  "stream": false,
  "tools": [
    {
      "type": "function",
      "function": {
        "name": "get_current_weather",
        "description": "Get the current weather for a location",
        "parameters": {
          "type": "object",
          "properties": {
            "location": {
              "type": "string",
              "description": "The location to get the weather for, e.g. San Francisco, CA"
            },
            "format": {
              "type": "string",
              "description": "The format to return the weather in, e.g. '\''celsius'\'' or '\''fahrenheit'\''",
              "enum": [
                "celsius",
                "fahrenheit"
              ]
            }
          },
          "required": [
            "location",
            "format"
          ]
        }
      }
    }
  ]
}'
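What happens after such a request is up to the client. The Python sketch below assumes the assistant message carries a `tool_calls` list whose entries hold a function name and an arguments object, and that results are sent back as messages with the role "tool". The weather function is a hypothetical stand-in with made-up values, and `run_tool_calls` is an illustrative helper, not an Ollama API.

```python
import json


def get_current_weather(location, format):
    # Hypothetical stand-in: a real implementation would query a weather service.
    return {"location": location, "temperature": 22, "unit": format}


TOOLS = {"get_current_weather": get_current_weather}


def run_tool_calls(message, tools=TOOLS):
    """Execute each requested tool and return "tool" role messages with the results."""
    results = []
    for call in message.get("tool_calls", []):
        fn = call["function"]
        output = tools[fn["name"]](**fn["arguments"])
        results.append({"role": "tool", "content": json.dumps(output)})
    return results


# Hand-written sample of an assistant message requesting one tool call.
sample_message = {
    "role": "assistant",
    "content": "",
    "tool_calls": [
        {"function": {"name": "get_current_weather",
                      "arguments": {"location": "Paris", "format": "celsius"}}}
    ],
}
print(run_tool_calls(sample_message))
```

The returned messages would be appended to the conversation and sent in a follow-up /api/chat request so the model can phrase the final answer.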

Generate embeddings

An embedding is a high-dimensional numeric vector that represents the meaning of a piece of text, so that similar texts end up with nearby vectors. Ollama provides an API that returns the embedding of a text.

curl --location 'http://localhost:11434/api/embed' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral",
  "input": "Why is the sky blue?"
}'

Embeddings for multiple inputs

Ollama can return embeddings for multiple inputs in a single request.

curl --location 'http://localhost:11434/api/embed' \
--header 'Content-Type: application/json' \
--data '{
  "model": "mistral",
  "input": [
    "Why is the sky blue?",
    "Why is the grass green?"
  ]
}'
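A common use of two embeddings is to compare them. The Python sketch below computes cosine similarity, assuming the decoded /api/embed response holds one vector per input under an `embeddings` key. The tiny three-number vectors are hand-written for illustration; real embeddings have far more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hand-written stand-in for a decoded response to a two-input request.
sample_embed = {"embeddings": [[1.0, 0.0, 1.0], [1.0, 1.0, 0.0]]}
first, second = sample_embed["embeddings"]
print(cosine_similarity(first, second))  # about 0.5 for these sample vectors
```

Values near 1 suggest the two texts are semantically close, while values near 0 suggest they are unrelated.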