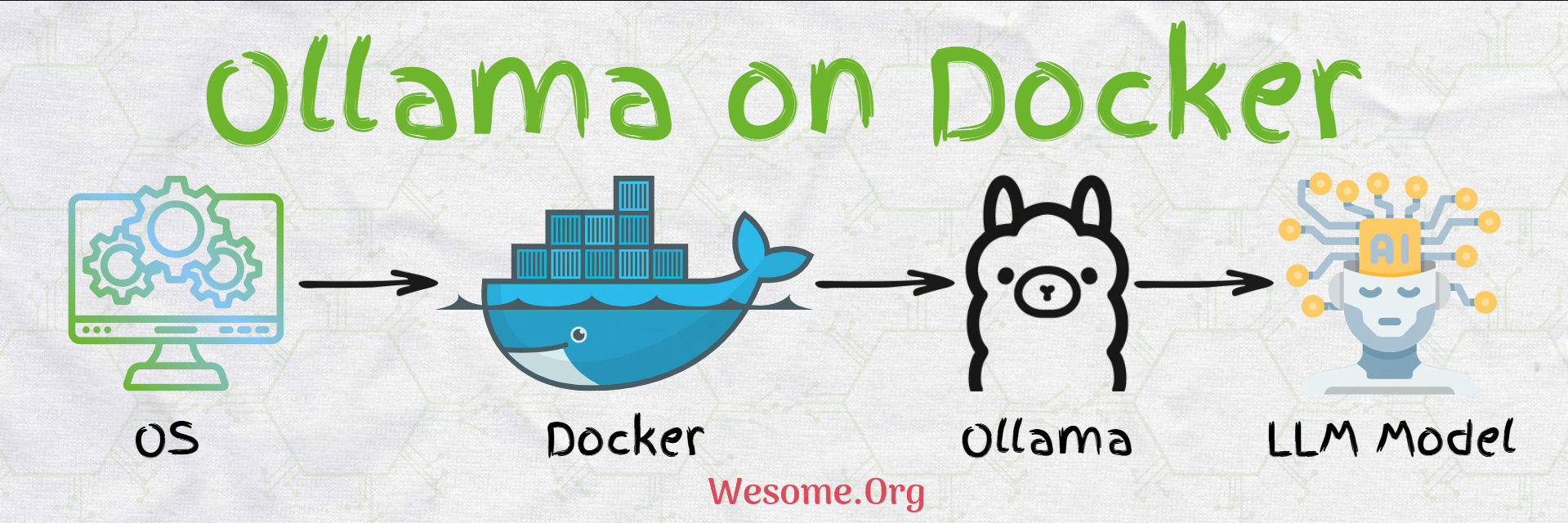

Ollama can be installed on all major operating systems: Linux, macOS, and Windows. The official download page explains the installation process for each platform. In this tutorial, we run Ollama in Docker, which works the same way on every operating system.

The latest Ollama image, `ollama/ollama`, is available from the official Ollama page on Docker Hub. The image provides a runtime for serving large language models (LLMs). Ollama offers a library of models suited to different tasks, and the full list is available in the Ollama model library on the official website. This tutorial uses the latest Mistral model.

Mistral is the default Ollama model in Spring AI.
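If you would rather not rely on that default, the model and the server address can be set explicitly. The following is a minimal sketch, assuming the Spring AI property names `spring.ai.ollama.base-url` and `spring.ai.ollama.chat.options.model` (check them against your Spring AI version). It sets them through environment variables, which Spring Boot's relaxed binding maps to properties by replacing dots with underscores, removing dashes, and upper-casing. The port matches the one published by the compose file in this tutorial.

```shell
# Assumed Spring AI properties, mapped by Spring Boot relaxed binding:
#   spring.ai.ollama.base-url           -> SPRING_AI_OLLAMA_BASEURL
#   spring.ai.ollama.chat.options.model -> SPRING_AI_OLLAMA_CHAT_OPTIONS_MODEL
export SPRING_AI_OLLAMA_BASEURL=http://localhost:11434
export SPRING_AI_OLLAMA_CHAT_OPTIONS_MODEL=mistral
```

Setting the model explicitly makes the application's behavior independent of whatever default a future Spring AI release ships with.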

To run the Mistral model with Ollama, create the following `docker-compose.yml` file:

```yaml
services:
  ollama-model:
    image: ollama/ollama:latest
    container_name: ollama_container
    ports:
      - 11434:11434/tcp
    healthcheck:
      test: ollama --version || exit 1
    command: serve
    volumes:
      # Persist downloaded models on the host.
      - ./ollama/ollama:/root/.ollama
      # Mount the startup script into the container.
      - ./entrypoint.sh:/entrypoint.sh
    pull_policy: missing
    tty: true
    # Quoted so that YAML does not parse it as the boolean false.
    restart: "no"
    entrypoint: [ "/usr/bin/bash", "/entrypoint.sh" ]
```

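Save the following script as `entrypoint.sh` next to the compose file. It starts the Ollama server, pulls the Mistral model, and then waits on the server process so the container keeps running:

```shell
#!/bin/bash

# Start Ollama in the background.
/bin/ollama serve &
# Record the process ID.
pid=$!

# Pause for Ollama to start.
sleep 5

echo "🔴 Retrieve mistral model..."
ollama pull mistral
echo "🟢 Done!"

# Wait for the Ollama process to finish.
wait $pid
```

Then start the container:

```shell
docker-compose up
```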
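The fixed `sleep 5` in `entrypoint.sh` assumes the server is ready within five seconds; on a slow machine, `ollama pull` may run before the server accepts connections and fail. One option is to poll instead of sleeping. The sketch below defines a hypothetical `wait_for` helper (not part of Ollama) and assumes that `ollama list` exits with a non-zero status while the server is unreachable.

```shell
# Hypothetical helper (not part of Ollama): run a command until it succeeds,
# sleeping between tries. Returns 0 on success, 1 once all attempts fail.
# Usage: wait_for <max_attempts> <delay_seconds> <command> [args...]
wait_for() {
  local max_attempts=$1 delay=$2 attempt=1
  shift 2
  while :; do
    # Discard output; only the exit status matters here.
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    # Give up after the last attempt without sleeping again.
    if [ "$attempt" -ge "$max_attempts" ]; then
      return 1
    fi
    sleep "$delay"
    attempt=$((attempt + 1))
  done
}
```

In `entrypoint.sh`, the `sleep 5` line could then be replaced with `wait_for 30 1 ollama list || exit 1`, which waits roughly 30 seconds at most and stops the container with an error if the server never comes up.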

To confirm that the model has been pulled and is available, list the models inside the container:

```shell
docker exec -it ollama_container ollama list
```
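Spring AI talks to Ollama over its HTTP API on port 11434 rather than through `docker exec`, so it can also help to check the model from the host. Ollama's `GET /api/tags` endpoint returns the locally available models as JSON. The sketch below is a hypothetical `has_model` helper that searches that JSON with `grep`; it assumes compact JSON and model names that carry a tag, such as `mistral:latest`. A JSON tool such as `jq` would be more robust if it is installed.

```shell
# Hypothetical helper (not part of Ollama): succeed if the JSON read on stdin,
# e.g. the response of GET /api/tags, lists the given model.
# Assumes compact JSON such as {"models":[{"name":"mistral:latest",...}]}.
has_model() {
  local model=$1
  # "mistral" matches any tag ("mistral:latest"); "mistral:latest" matches exactly.
  # -F treats the patterns as fixed strings, so dots in names are literal.
  grep -qF -e "\"name\":\"${model}:" -e "\"name\":\"${model}\""
}
```

For example: `curl -s http://localhost:11434/api/tags | has_model mistral && echo "mistral is available"`.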